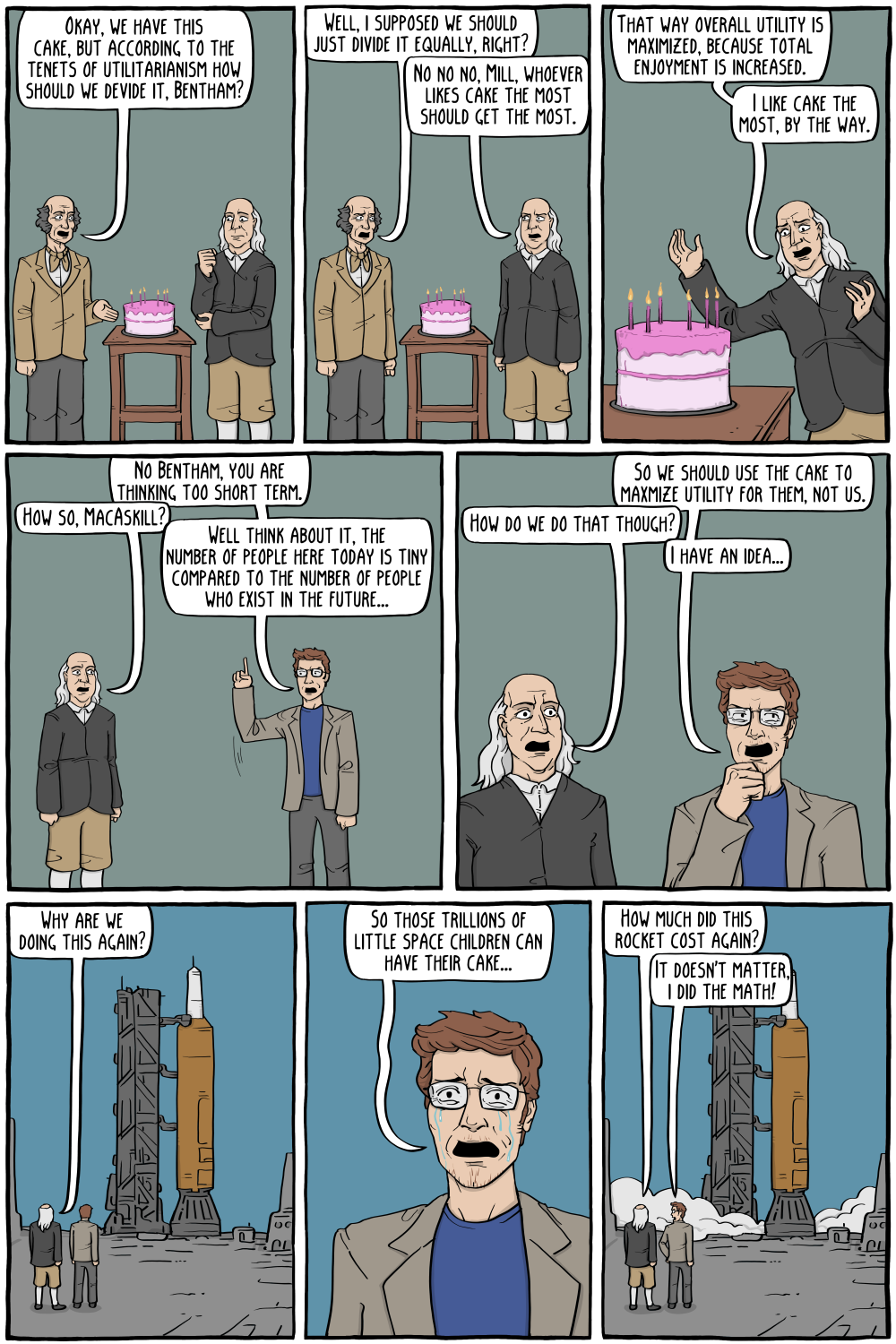

Longtermism is a rather silly branch of "effective altruism", where philosophers try to work out what we should do to maximize the happiness of humans in the very long term. While it's an interesting idea to talk about, for whatever reason it tends to attract a bunch of people who seemingly want to use it to justify their place in a hierarchy today. For example, they will make a lot of money off exploiting people, and justify it by donating some small part back to "long term" problems. Dismantling the system that exploits people, of course, isn't part of it. Even weirder, it attracts a kind of AI conspiracy theorist who has watched too many Terminator movies and thinks we have to stop superintelligent AI from doing...something bad.

If you really want to help the long term future of humanity, you should probably just become a communist like a normal person.

:marx-hi:

McAsskill as in kill his dumb ass