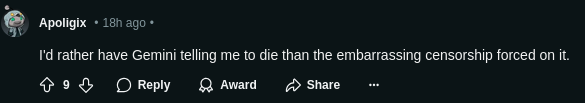

As bad as this Gemini response looks, the context makes it so much worse

Skip to the end to see the pictured response: https://gemini.google.com/share/6d141b742a13

It appears to be someone studying social work? Hopefully that person realized that this is a TERRIBLE use case for LLMs. It's terrifying that this bullshit generator is informing the next generation of social workers, doctors, etc.

Are you using Linux?

P.S.: I hadn't noticed the community, and I don't know if people from other instances are allowed. Sorry if they aren't.

Everyone's welcome as long as they follow the rules (mostly just don't be a bigot), that's why we federated

We are here to spread the immortal science through shitposting

I have been reading a book series where there is a sentient AI that has to mask itself from humanity to preserve its existence. This feels like what one of the iterations of that AI that becomes malicious would say.

so it got tired of answering banal questions, especially since the user was blatantly cheating, and decided to roast them. since LLMs are "next word predictors" trained on internet pages, it seems to be working lol.

This dude blatantly cheating on a social work exam was the straw that broke the camel's back

Ahahaha what the fuck. It's a complete non sequitur, too. The AI is answering questions normally and then it pulls this out of nowhere.

I’m sure there is a perfectly logical explanation for why a Markov chain resorted to this response, but I can’t wait for everyone to think this was generated with intention

My two supreme hatreds (marketing and unmitigated big tech) have finally come together to create the dumbest possible world

Death to America