
Human brains will do the same thing as people grow up and adopt the attitudes of society.

If anything, this is good evidence that bigotry is a learned behaviour that simply comes from observed inequality and attempting to rationalise it.

Most machine learning and AI does not include any assumptions or axioms from the developers. The data used to train these algorithms, however, does. But yes, AI is not some kind of magic, just another overhyped technology that can be useful when used right.

"Why does my AI spend all day watching disgusting pornography and getting into endless pedantic arguments?"

No, we shouldn't. But again, these algorithms derive whatever they derive from the data, automatically. Nobody is manually putting "race" or "gender" or things like that into the algorithm itself (at least in most cases). And this is where the trap lies: because these algorithms are neutral on their own, the people who use them get tricked into thinking that the outcome is also neutral and objective, and forget that it is literally all determined by the data that goes in.

They aren’t necessarily neutral, since programming the relative importance of characteristics may bake implicit bias into the training of the algorithm. It’s not just a one-way street of biased data being fed into it; the very structure of the training can include biases and accentuate them.
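A toy sketch of the "accentuate" part, assuming a made-up dataset where two out of three "cooking" photos are labelled "woman". The predictor just picks the most common label per activity, which stands in for any training objective that only rewards accuracy. All names and numbers here are hypothetical, not taken from the article.

```python
from collections import Counter, defaultdict

# Hypothetical training data: (activity, gender label). 2/3 of cooking photos show women.
train = [("cooking", "woman")] * 66 + [("cooking", "man")] * 33


def fit_majority(rows):
    """Learn, per activity, the single most frequent label (maximises accuracy)."""
    counts = defaultdict(Counter)
    for activity, label in rows:
        counts[activity][label] += 1
    return {activity: c.most_common(1)[0][0] for activity, c in counts.items()}


model = fit_majority(train)

# Share of "woman" in the data vs. in the model's predictions on the same photos.
data_share = sum(label == "woman" for _, label in train) / len(train)
pred_share = sum(model[activity] == "woman" for activity, _ in train) / len(train)

print(f"data: {data_share:.2f}, predictions: {pred_share:.2f}")
# prints "data: 0.67, predictions: 1.00"
```

So a 67/33 skew in the data becomes a 100/0 skew in the output, purely because of how the objective is set up.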

But that's the thing: most of the algorithms in mainstream use do not program any relative importance of characteristics; you do not program any characteristics at all. The algorithm learns all of these on its own from the data, and you only choose which features to include. That choice is the source of bias, not the algorithm that decides how to split your decision tree ...
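To make the decision tree point concrete, here is a minimal sketch of how a split gets chosen, assuming Gini impurity as the criterion (one common choice, not necessarily what any particular product uses). The hiring records and the `postcode` proxy feature are invented for illustration.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_feature(rows, labels, features):
    """Pick the feature whose split gives the lowest weighted Gini impurity."""
    def split_impurity(feature):
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[feature], []).append(label)
        return sum(len(g) / len(labels) * gini(g) for g in groups.values())
    return min(features, key=split_impurity)


# Hypothetical hiring records; "postcode" acts as a proxy for a protected attribute.
rows = [
    {"postcode": "A", "degree": "yes"},
    {"postcode": "A", "degree": "no"},
    {"postcode": "A", "degree": "yes"},
    {"postcode": "B", "degree": "yes"},
    {"postcode": "B", "degree": "no"},
    {"postcode": "B", "degree": "yes"},
]
labels = ["hired", "hired", "hired", "rejected", "rejected", "rejected"]

print(best_feature(rows, labels, ["postcode", "degree"]))  # postcode
print(best_feature(rows, labels, ["degree"]))              # degree
```

The split criterion has no idea what a postcode is. It latches onto it only because the person building the model offered it as a feature and the historical labels line up with it.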

Woah, you're telling me an AI that's fed data from a racist/sexist society... will draw racist/sexist conclusions? I am shocked!

This is like when somebody made that chat bot and it scanned 4chan and started being racist.

You mean Microsoft Tay?

It was a Twitter bot with the "personality" of an American teenage girl that 4chan and other random chuds turned into a nazi within hours. Its "greatest hits", CW obv.

Sure, it just means that the patriarchy is correct, and not that a man programmed the AI.

when i see a woman i think 'smile :)' too, am i actually a robot?

THE VOLCEL POLICE HAVE BEEN ABOLISHED, COMRADE

CUMMING IS A PROLETARIAN ACTIVITY

For some reason I read “When AI” as “Weird Al” Yankovic and I was like damn not another one of my heroes:////

Algorithms of Oppression https://nyupress.org/9781479837243/algorithms-of-oppression/

Sat in on a lecture of hers last year, she’s been studying this for a while. Scary and fascinating.

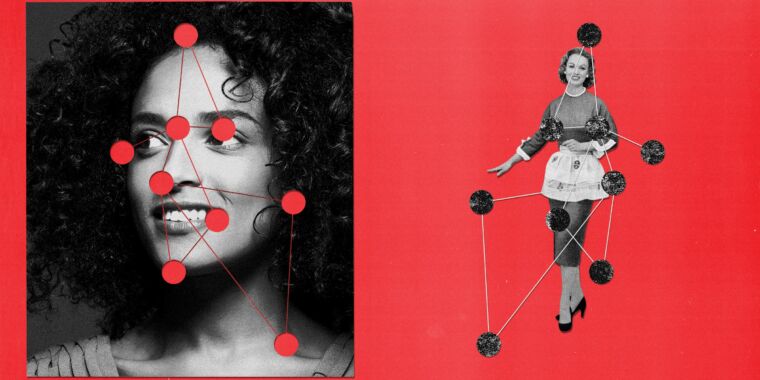

instead tend to replicate or even amplify historical cultural biases

irl ML is only as good as its training data

I enjoy being an image classifier, it is my favorite machine learning application.

When I see apolitical things, I think to myself "yes".

When I see gender, I think to myself "no".

Ok, no. Algorithms do not see the world. They're just complicated maths. Nothing special. If you feed one data that associates men with "official" and women with "smile", it will capture the relationship in that data. The algorithm itself is neutral, in the sense that it will learn and do whatever you give it, just like the equation X + Y is neutral. Algorithms reflect the cultural biases of the data they are given. The problem is not the AI, it's society.
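A rough illustration of the "X + Y is neutral" point, assuming a deliberately simple model that just counts how often a tag appears with each gender. The caption data is made up; the point is only that the same function gives different answers depending on what it is fed.

```python
from collections import Counter, defaultdict


def learn_associations(captions):
    """Estimate P(tag | gender) from (gender, tag) pairs by plain counting."""
    counts = defaultdict(Counter)
    for gender, tag in captions:
        counts[gender][tag] += 1
    return {
        gender: {tag: n / sum(tags.values()) for tag, n in tags.items()}
        for gender, tags in counts.items()
    }


# Hypothetical caption data, skewed the way the comment above describes.
skewed = ([("man", "official")] * 8 + [("man", "smile")] * 2
          + [("woman", "official")] * 2 + [("woman", "smile")] * 8)

# Same tags, but balanced across genders.
balanced = ([("man", "official")] * 5 + [("man", "smile")] * 5
            + [("woman", "official")] * 5 + [("woman", "smile")] * 5)

print(learn_associations(skewed)["woman"]["smile"])    # 0.8
print(learn_associations(balanced)["woman"]["smile"])  # 0.5
```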

That said, I do have suspicions that certain algos do explicitly consider bias (i.e. ones used for crime prediction, credit scores, etc.), in addition to what is present in their training data.

But that's what I'm saying, while the algorithms in general are fine, a lot of these private and commercial algorithms are questionable. Especially in certain fields (khem cops khem)

How would an algorithm be sexist? That doesn't make any sense. Of course it's about what data it's given

This whole conversation seems to be born of a confusion of terms between model (the end product of machine learning, which does contain the biases inherent in the dataset it was trained with, in the form of mathematical coefficients) and training algorithm (the mathematical process defining exactly how a model is fit to data, e.g. how the model's coefficients change when it incorrectly or correctly classifies a piece of input data during training). The question of whether a model reflects bigotry is very easy to assess, but the question of whether a training algorithm contains inherent bigotry is kind of philosophically heavy.
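For anyone who wants the model vs. training algorithm split spelled out, here is a minimal sketch using the classic perceptron update rule as the training algorithm (picked only because it is short). The feature names `is_woman` and `has_experience` and the four samples are hypothetical.

```python
def train_perceptron(samples, epochs=10, lr=1.0):
    """Training algorithm: the classic perceptron update rule."""
    n_features = len(samples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for x, y in samples:  # y is +1 or -1
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            predicted = 1 if score > 0 else -1
            if predicted != y:
                # Misclassified: nudge the coefficients toward the correct label.
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
    return weights, bias  # the "model" is just these numbers


# Hypothetical data: features are [is_woman, has_experience]; label +1 = "approve".
# The labels were chosen to follow the first feature only.
samples = [
    ([0, 1], 1), ([0, 0], 1),
    ([1, 1], -1), ([1, 0], -1),
]

weights, bias = train_perceptron(samples)
print(weights, bias)
```

The update rule never mentions what any column means. The coefficient on `is_woman` comes out negative only because the labels in this toy dataset say so, and that coefficient lives in the model, not in the training algorithm.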

Edit: hold this thought I should actually read through the article first lmao

Edit2: all right yeah, as I figured, the article fails to disambiguate that shit. Anyway, although it's a tougher question, it's definitely possible that training algorithms can effectively be biased, but I think to really assess that you'd have to do a lot of studies with them with EXTREMELY controlled datasets to see if the models they produce tend to reflect the kinds of biases the article is talking about even with datasets controlled for those common biases. Honestly, that's a solid premise for an entire thesis project.

Uncle hoe basically answered it, but I want to emphasize:

Most of these new algorithms use machine learning. Roughly, that means they feed a machine data, and it tries out a huge number of candidate models (often starting from random ones), keeps whatever fits the data best, and adjusts from there. Rinse and repeat.

The people creating the test keep feeding it data and computation until they like the results. The data IS the algorithm.

The algorithm can end up being human-unreadable, basically a black box.

But somewhere in that algorithm, in a completely incomprehensible chunk of code, it decides that its masters really love it when it fires people who buy shampoo for kinky hair.
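A rough sketch of the "generate candidates, keep the best, rinse and repeat" loop, written as plain random search. Real systems mostly use gradient-based training rather than this, so treat it as an illustration of the idea only. The features `performance` and `buys_product_x` and the decision history are invented.

```python
import random


def accuracy(weights, data):
    """Fraction of past decisions the candidate rule reproduces."""
    hits = 0
    for features, decision in data:
        score = sum(w * f for w, f in zip(weights, features))
        hits += (score > 0) == decision
    return hits / len(data)


def random_search(data, n_features, rounds=2000, seed=0):
    """Generate random candidate rules and keep whichever scores best."""
    rng = random.Random(seed)
    best = [0.0] * n_features
    best_score = accuracy(best, data)
    for _ in range(rounds):
        candidate = [rng.uniform(-1, 1) for _ in range(n_features)]
        score = accuracy(candidate, data)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


# Hypothetical history: features are [performance, buys_product_x];
# decision True = "kept". The past decisions penalise buys_product_x.
history = [
    ([1.0, 0.0], True), ([0.8, 0.0], True), ([0.3, 0.0], True),
    ([1.0, 1.0], False), ([0.8, 1.0], False), ([0.3, 1.0], False),
]

best, score = random_search(history, n_features=2)
print(best, score)
```

Nobody typed "penalise people who buy product X" anywhere. The search just found that a negative weight on that column reproduces the past decisions, and with thousands of features instead of two, nobody would ever spot it by reading the numbers.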