This should be a popular opinion honestly, because it's correct.

It shouldn't be taken as scientific truth, but it can help you know yourself and others better, and it's an insult to compare it to astrology because at least it's not based on completely random things like the position of the planets when you were born. The issue is that most people only know MBTI as online tests, which are self-report and have extremely vague and stereotypical questions that can very easily be manipulated to get whatever result you want. The worst offender is the most popular one, 16personalities, which isn't even an actual MBTI test but a Big 5 one (which is not to say Big 5 is bad, but it's very misleading to map it to MBTI types). In reality, using MBTI somewhat effectively takes studying Carl Jung's work and how MBTI builds on it, lots of introspection, asking people about yourself, and lots of doubting and double-checking your thinking. And very importantly, you have to accept that in the end none of this is real; it's just a way to conceptualize different aspects of our personalities, and it's in no way predictive. You have to let go of stereotypes: anyone can act in any way, it's just about tendencies.

Copyright is far too long and should only last at most 20 years.

Actually, George Washington would agree with me if he were still alive. He and the other founding fathers wrote copyright into US law with a term of 14 years (renewable once). Then big corporations changed the laws in their favor.

Totally agree. "Intellectual property" shouldn't be a thing. Yes, writing a novel or recording a song is work, but so is building a house. Craftspersons don't get royalties from people using the widgets that they make; they get paid only for the first sale of the product.

That said, intangibles like written and recorded media are qualitatively different, in that they can be effortlessly copied. Without some sort of legal protection, creators wouldn't be able to profit from even that first sale. A limited-term copyright is an okay compromise.

But now that corporations can "own" intangible works nearly indefinitely, they're getting greedy, and are applying that to physical objects that they sell through the subscription model. And it's bullshit.

Yes, absolutely, roll back copyright terms to 14 years.

Copyright and proprietariness will vanish in a better society

Pitbulls are not more genetically predisposed towards biting or mauling than other breeds and the supposed "statistical data" on the subject is based around a confluence of inaccurate metrics caused by 1) people not being very good at accurately identifying dog breeds, 2) existing groups that hate pitbulls pushing bad statistics for political purposes, and 3) a self-fulfilling prophecy of pitbulls having a bad reputation and actively being sought out by people who want vicious dogs and who will treat their dogs in such a way as to encourage that behavior. And I say all of this as someone who does not own a pitbull and probably never will.

but on the other hand anglos literally bred pit bulls to tear cattle apart while they were still alive (and it was actually illegal to slaughter cows in england using normal methods)

I'm not saying pitbulls necessarily are genetically like that but I wouldn't be surprised.

But regardless, let us appreciate the fact that 1800s-era Brits thought that the only appropriate way to consume beef was by slaughtering it with a dog ripping its muscle off its back while tied to a pole. Even being killed by a pack of wolves or a lion would be more humane lol

- a self-fulfilling prophecy of pitbulls having a bad reputation and actively being sought out by people who want vicious dogs and who will treat their dogs in such a way as to encourage that behavior.

I'm pretty neutral on dog genetics but tbh it still leads you to the same conclusion: not everyone should just be able to get the dog that kills you

Sure, I generally agree with that, but that being the case we should talk about dogs purely in terms of size and weight. Big dogs (and I mean ALL big dogs) are dangerous for the same reason big people are dangerous (potentially) - it's why weight classes exist in competitive martial arts.

Add into this people who love pits and own them, but also believe they will "turn," and so constantly give their dogs subtle cues to be on edge, stressed, and like something is wrong. They're no more prone to dangerous actions than any other breed, they're just very, very intelligent dogs that learn how to react to their surroundings. The myth of the aggressive pit is what causes the aggressive pit. We need real education on dogs in general, because that Labrador you love or the poodle who was your best friend when you were a kid is just as capable of snapping or "turning." All dogs can bite, and all breeds can be sweet and well behaved.

Disruptive protest, no matter how annoying, is valid and should be protected under law. When the government moves to ban protest and dissent, they've crossed the line into authoritarianism.

The right to protest is a fundamental of democracy, and we should not accept any erosion of the fundamentals of democracy.

What do you mean by "protected under the law"? And what constitutes disruptive protest?

Then it should be lawful to manually get the protesters off of the road.

You mean like the Nazi in Charlottesville tried to do?

Go seek help before you kill somebody.

Nah, I don't want to kill anyone, I don't even own a car. I just made fun of something I thought was ridiculous with something even more ridiculous.

I personally have never even seen such a protest, but I think it's very ridiculous to "protect by law" blocking people, who may even agree with you, from reaching the cemetery, weddings, or other important events on time.

Edit: btw why is everyone attaching images that take up half my phone's screen and make their comments hard to read? Is this some new trend?

If protests aren't disruptive they're effectively politically-charged social gatherings.

And no, you don't get to just "Move people out of the way" so you can get to your event, job, or whatever. Protestors do get run over - it's not funny or ridiculous, it's fucking pathetic.

Yep, fully agree.

What I meant was ridiculous was suggesting something that pathetic.

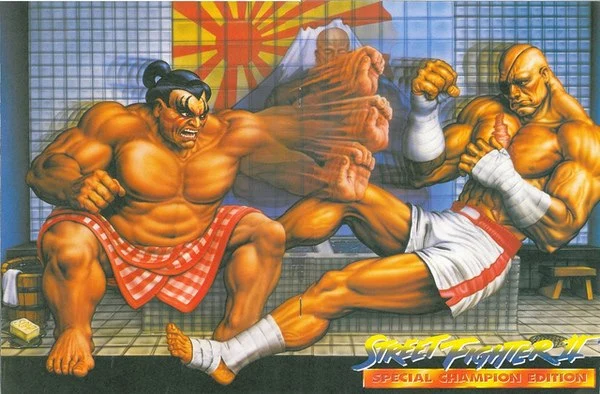

if you wanna run over people that bad, why not try playing GTA?

Hope you're okay with taking in your last sights through a spiderweb of punctured glass

Piracy equals culture preservation in an age of subscription services.

I suppose all the people standing in front of you are record label executives then

No of course not.

I still pay for things I can actually own; however, subscription services routinely change, limit or simply remove items that you supposedly bought.

What we're currently calling AI isn't AI but just a language processing system that takes its best guess at a response from its database of information they pilfered from the internet like a more sophisticated Google.

It can't really think for itself and its answers can be completely wrong. There's nothing intelligent about it.

I hate having to explain this shit to literal Comp Sci majors.

Even if ChatGPT was literally a perfect copy of a human being it would still be 0 steps closer to a general intelligence because it does not fucking understand WHAT or HOW to actually do the things it suggests.

What if AI passes the Turing test not because computers got intelligent but because people got dumber

Indeed, but it's not the popular opinion in the general public and it's currently the biggest buzzword in tech even if it's wrong. People are throwing serious money at "AI" even if it isn't.

This whole "OpenAI has Artificial General Intelligence but they're keeping it secret!" thing is like saying Microsoft had ChatGPT 20 years ago with Clippy.

Humans don't even know what intelligence is, the thing we invented to try to measure who's got the best brains - we literally don't even have a scientific definition of the word, much less the ability to test it - so we definitely can't program it. We are a veeeeerry long way from even understanding how thoughts and memories work; and the thing we're calling "general intelligence"? We have no fucking idea what that even means; there's no way a bunch of computer scientists can feed enough Internet to an ML algorithm to "invent" it. (No shade, those peepos are smart - but understanding wtf intelligence is isn't going to come from them.)

One caveat tho: while I don't think we're close to AGI, I do think we're very close to being able to fake it. Going from ChatGPT to something that we can pretend is actual AI is really just a matter of whether we, as humans, are willing to believe it.

For the record comp sci major here.

So I understand all that, but my counter point: can we prove by empirical measure that humans operate in a way that is significantly different? (If we can, I would love to know, because I was cornered by a similar talking point when making a similar argument some weeks ago)

Can you make a logical decision on your own even when you don't have all the facts?

The current version of AI cannot, it makes guesses based on how we've programmed it, just like every other computer program.

I fail to see the distinction between "making a logical decision without all the facts" and "make guesses based on how [you've been programmed]". Literally what is the difference?

I'll concede that human intelligence is several orders of magnitude more powerful, can act upon a wider space of stimuli, and can do it at a fraction of the energy cost. That definitely sets it apart. But I disagree that it's the only "true" form of intelligence.

Intelligence is the ability to accumulate new information (i.e. memorize patterns) and apply that information to respond to novel situations. That's exactly what AI does. It is intelligence. Underwhelming intelligence, but nonetheless intelligence. The method of implementation, the input/output space, and the matter of degree are irrelevant.

It's not just about storage and retrieval of information but also about how (and if) the entity understands the information and can interpret it. This is why an AI still struggles to drive a car because it doesn't actually understand the difference between a small child and a speedbump.

Meanwhile, a simple insect can interpret stimulus information and independently make its own decisions without assistance or having to be pre-programmed by an intelligent being on how to react. An insect can even set its own goals based on that information, like acquiring food or avoiding predators. The insect does all of this because it is intelligent.

In contrast to the insect, an AI like ChatGPT is not any more intelligent than a calculator, as it relies on an intelligent being to understand the subject and formulate the right stimulus in the first place. Then its result is simply an informed guess at best; there's no understanding like an insect has that it needs to zig zag in a particular way because it wants to avoid getting eaten by predators. Rather, AI as we know it today is really just a very good information retrieval system and not intelligent at all.

"Understanding" and "interpretation" are themselves nothing more than emergent properties of advanced pattern recognition.

I find it interesting that you bring up insects as your proof of how they differ from artificial intelligence. To me, they are among nature's most demonstrably clockwork creatures. I find some of their rather predictable "decisions" to some kinds of stimuli to be evidence that they aren't so different from an AI that responds "without thinking".

The way you can tease out a response from ChatGPT by leading it by the nose with very specifically worded prompts, or put it on the spot to hallucinate facts that are untrue is, in my mind, no different than how so-called "intelligent" insects can be stopped in their tracks by a harmless line of Sharpie ink, or be made to death spiral with a faulty pheromone trail, or to thrust themselves into the electrified jaws of a bug zapper. In both cases their inner machinations are fundamentally reactionary and thus exploitable.

Stimulus in, action out. Just needs to pass through some wiring that maps the I/O. Whether that wiring is fleshy or metallic doesn't matter. Any notion of the wiring "thinking" is merely anthropomorphism.

You said it yourself; you as an intelligent being must tease out whatever response you seek out of ChatGPT by providing it with the correct stimuli. An insect operates autonomously, even if in simple or predictable ways. The two are very different ways of responding to stimuli even if the results seem similar.

The only difference you seem to be highlighting here is that an AI like ChatGPT is only active when queried while an insect is "always on". I find this to be an entirely irrelevant detail to the question of whether either one meets criteria of intelligence.

I have to say no, I can't.

The best decision I could make is a guess based on the logic I've determined from my own experiences that I would then compare and contrast to the current input.

I will say that "current input" for humans seems to be more broad than what is achievable for AI and the underlying mechanism that lets us assemble our training set (read as: past experiences) into useful and usable models appears to be more robust than current tech, but to the best of my ability to explain it, this appears to be a comparable operation to what is happening with the current iterations of LLM/AI.

Ninjaedit: spelling

If you can't make logical decisions then how are you a comp sci major?

Seriously though, the point is that when making decisions you as a human understand a lot of the ramifications of them and can use your own logic to make the best decision you can. You are able to make much more flexible decisions and exercise caution when you're unsure. This is actual intelligence at work.

A language processing system has to have its prompt framed in the right way, it has to have knowledge in its database about it and it only responds in the way that it's programmed to. It doesn't understand the ramifications of what it puts out.

The two "systems" are vastly different in both their capabilities and output. Even in image processing AI absolutely sucks at driving a car for instance, whereas most humans can do it safely with little thought.

and exercise caution when you're unsure

I don't think that fully encapsulates a counter point, but I think that has the beginnings of a solid counter point to the argument I've laid out above (again, it's not one I actually devised, just one that really put me on my heels).

The ability to recognize when it's out of its depth does not appear to be something modern "AI" can handle.

As I chew on it, I can't help but wonder what it would take to have AI recognize that. It doesn't feel like it should be difficult to have a series of nodes along the information processing matrix to track "confidence levels". Though, I suppose that's kind of what is happening when the creators of these projects try to keep their projects from processing controversial topics. It's my understanding those instances act as something of a short circuit: when (if you will) confidence "that I'm allowed to talk about this" drops below a certain level, the AI will spit out a canned response instead of actually attempting to process the input against the model.

The above is intended as more of a brain dump than a coherent argument. You've given me something to chew on, and for that I thank you!
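To make the "confidence levels" idea a bit more concrete, here is a rough sketch. It assumes the system exposes a raw score per candidate answer and treats the top softmax probability as a (crude) confidence proxy; the names `answer_or_abstain`, `threshold` and the canned reply are made up for illustration, and this is not a claim about how any real chatbot's guardrails are built.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def answer_or_abstain(labels, scores, threshold=0.75):
    """Return the best label, or a canned reply if confidence is too low.

    `threshold` is an arbitrary illustrative cutoff, not a recommended value.
    """
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "I'm not sure."  # the "short circuit" canned response
    return labels[best]
```

With scores like `[5.0, 0.0]` the top option clears the cutoff and gets returned; with nearly tied scores like `[0.1, 0.0]` the function falls back to the canned reply. Whether a probability like this actually tracks "being out of its depth" is a separate question.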

Well, it's an online forum and I'm responding while getting dressed and traveling to an appointment, so concise responses are what you're gonna get. In a way it's interesting that I can multitask all of these complex tasks reasonably effortlessly, something else an existing AI cannot do.

You are ~30 trillion cells all operating concurrently with one another. Are you suggesting that is in any way similar to a Turing machine?

Yes? I think that depends on your specific definition and requirements of a Turing machine, but I think it's fair to compare the amalgamation of cells that is me to the "AI" LLM programs of today.

While I do think that the complexity of input, output, and "memory" of LLM AIs is limited in current iterations (and thus makes it feel like a far-fetched comparison to "human" intelligence), I do think the underlying process is fundamentally comparable.

The things that make me "intelligent" are just a robust set of memories, lessons, and habits that allow me to assimilate new information and experiences in a way that makes sense to (most of) the people around me. (This is abstracting away that this process is largely governed by chemical reactions, but considering consciousness appears to be just a particularly complicated chemistry problem reinforces the point I'm trying to make, I think).

My definition of a Turing machine? I'm not sure you know what Turing machines are. It's a general purpose computer, described in principle. And, in principle, a computer can only carry out one task at a time. Modern computers are fast, they may have several CPUs stitched together and operating in tandem, but they are still fundamentally limited by this. Bodies don't work like that. Every part of them is constantly reacting to its environment and its neighboring cells - concurrently.

You are essentially saying, "Well, the hardware of the human body is very complex, and this software is(n't quite as) complex; so the same sort of phenomenon must be taking place." That's absurd. You're making a lopsided comparison between two very different physical systems. Why should the machine we built for doing sums just so happen to reproduce a phenomenon we still don't fully understand?

That's not what I intended to communicate.

I feel the Turing machine portion is not particularly relevant to the larger point. Not to belabor the point, but to be as clear as I can be: I don't think nor intend to communicate that humans operate in the same way as a computer; I don't mean to say that we have a CPU that handles instructions in a (more or less) one at a time fashion with specific arguments that determine flow of data as a computer would do with Assembly instructions. I agree that anyone arguing human brains work like that is missing a lot in both neuroscience and computer science.

The part I mean to focus on is the models of how AIs learn, specifically in neural networks. There might be some merit in likening a cell to a transistor/switch/logic gate for some analogies, but for the purposes of talking about AI, I think comparing a brain cell to a node in a neural network is most useful.

The individual nodes in a neural network will have minimal impact on converting input to output, yet each one does influence the processing of one to the other. And with the way we train AI, how each node tweaks the result will depend solely on the past input that has been given to it.

In the same way, when met with a situation, our brains will process information in a comparable way: that is, any given input will be processed by a practically uncountable number of neurons, each influencing our reactions (emotional, physical, chemical, etc) in minuscule ways based on how our past experiences have "treated" those individual neurons.

In that way, I would argue that the processes by which AI are trained and operated are comparable to that of the human mind, though they do seem to lack complexity.

Ninjaedit: I should proofread my post before submitting it.

I agree that there are similarities in how groups of nerve cells process information and how neural networks are trained, but I'm hesitant to say that's the whole picture of the human mind. Modern anesthesiology suggests microtubules, structures within cells, also play a role in cognition.

Right.

I don't mean to say that the mechanism by which human brains learn and the mechanism by which AI is trained are 1:1 directly comparable.

I do mean to say that the process looks pretty similar.

My knee-jerk reaction is to analogize it as comparing a fish swimming to a bird flying. Sure there are some important distinctions (e.g. birds need to generate lift while fish can rely on buoyancy) but in general, the two do look pretty similar (i.e. they both take a fluid medium and push it to generate thrust).

And so with that, it feels fair to say that learning, the storage and retrieval of memories/experiences, and the way that stored information shapes our subconscious (and probably conscious too) reactions to the world around us seem largely comparable to the processes that underlie the training of "AI" and LLMs.

I don't think this position qualifies for that meme because there are legions of people who agree.

That's still called AI. Models under the ANN umbrella imitate nerve cells. What you're talking about is AGI.

We accept that it's unhealthy to be a shut-in and never leave your house, never interact with others if you can help it, but equally unhealthy is this sort of toxic extroversion where the thought of being alone with yourself for even a few hours is torture to you. If this is you, clearly you have some shit you need to work out that you're avoiding. Take a week off from the internet and going out. Don't talk to anyone. Maybe go camping alone for a couple days. You will survive, and be much more comfortable with yourself for it.

ok but who is this about? sometimes people get bored alone but I've never seen someone dread it like this.

I have. Maybe it's unkind to say it but they're kind of a drain on everyone around them. They can't even go to sleep without a podcast or the TV on.

That second part feels unrelated. I know a lot of extreme introverts that need something going to fall asleep.

Maybe you will meet someone like that and maybe you won't. I don't know what to say besides the fact that they definitely exist and you will know it when you see it.

Teachers should be paid 50% more. If you want good teachers to stay, you have to walk the walk, otherwise you'll get a perpetual cycle of overwhelmed grads being bossed around by rusted-on bottom-tier heads.

The starting salary for teachers is $85,000 and for a top-of-the-scale teacher it's $122,100 (~$80k in yank dollars).

The rusted-on people are still an issue

50% more than what? They get paid different amounts in different countries.

Continents are a hoax.

There are only 4 big islands: Afro-Eurasia, America, Antarctica, and Australia.

Who tf considers Europe a continent? Is this some American thing? I think everyone in Europe knows Europe and Asia are just parts of the world and Eurasia is the continent.

It might just be an American thing, but it's what I've always been taught.

The vast majority of humans are actually nice, altruistic and not selfish if you treat them with respect. And hence anarchism would not result in everyone killing each other.

What would an anarchistic world even look like? The first thing that would happen if society collapsed is local communities gathering into "tribes" which just expand and develop until we get to where we are. Humans are natural pack animals and would gravitate towards a structured community.

It's funny how you assume that structure can only happen with violence. You're right, an advanced anarchist society would be a real democracy (not a representative democracy like we have today). It would in fact be way more structured than societies today. If a small group of people can't simply enforce rules on all the others, the bodies that make decisions for the group will have to do a lot more work to make sure they are including everyone in the conversation in order to avoid conflict. It would involve a lot more conversation, deliberation and balancing than our current societies.

Read The Dispossessed by Ursula K. Le Guin for an indication of a functioning anarchist society years after it was established. Amazing book.

All drugs should be legalized. Not quite the whole world, but a large portion.

Doing drugs should be decriminalized, but not legal. Ideally when someone is found addicted to drugs they would be provided help rather than punishment. Selling drugs should remain criminal, but consequences should be determined based on the amount found being sold and to whom (selling to a child or someone who's pregnant would carry a higher penalty, at the discretion of the court). Legalizing would just give a tax incentive for pushing drugs, similar to gambling.

Edit: I want to clarify, I'm talking about addictive drugs with known negative health effects like meth. Weed can be legal, who cares.

That depends on the material and the recycler. Recycling of metals, glass, organic waste, paper, and car batteries is pretty good. South Korea and several European nations have really efficient recycling programs. Even some plastics like PET are easy to recycle. The issue is that a lot of plastics are very difficult to recycle, especially if they are embedded in another material or are specialized for their use case, and most places have trash recycling programs, pun intended.

the best thing to do with your car battery is throw it in the ocean. The electric eels use it to recharge.

Plastic recycling is the biggest scam of them all; they ship tonnes of it all over the world. PET gets "recycled" once: ship it around the world, get some fleece clothes back that can't be recycled.

For non plastic:

Most of my packaging materials go in the fireplace (A). That way I get energy back.

Instead of A, I could clean it with hot water, using energy (B), get in my car and drive to the recycling center, using energy (C), and have it shipped to a recycling plant on the other side of the world or wherever, using energy from oil.

Material for paper can be made CO2 neutral. Organic waste, sure, just compost it.

Metals, yeah, here we have something where it's great to recycle.

Glass. Meh just heated sand. But old glass needs to be disposed of somewhere, so yeah, there is a point in recycling it.

What should be done is reusing, and not using materials that I can't recover energy from at home. That's a heck of a lot better.

Glass bottles that get refilled are a great example. Another good idea is to repair stuff instead of throwing it away. True for everything from cars to laptops.

From a capitalist's view, recycling means (to a certain extent) free labour, raw materials and transport.

Reusing and repairs means less sales.

Glass. Meh just heated sand.

Wish it was that simple, but glass & construction-grade silica sand are actually becoming somewhat scarce and facing shortages. The composition, purity, and grain size make some sand vastly more desirable than other sand. There are already commercial operations grinding down quartz slabs, because that's easier than trying to sieve and process out all the non-quartz grains.

And sand-dredging operations aren't any less damaging to the environment than other strip mining methods, even where there are good deposits. Glass recycling is good.

Ok thanks, did not know that. But let's compare this against a better approach.

Energy use for recycling vs. reuse:

Standardized bottle and jar sizes with a deposit ("pant" in Swedish: you pay a bit extra and get it back when you return the container), which get cleaned and reused instead of being melted down.

Sweden had a good system that still works, but not as well as it used to (some types were removed and different sizes are in use). They still have it for 33 cl bottles, for example.

South Korea mostly burns it or turns it into fuel (to be burned later)

People who think Trump or Biden has their best interest in mind vs me.

I was gonna write something political but nah.

The Pokemon Mystery Dungeon games are some of the best Pokemon games, better than most of the (especially newer) main series games. I started with Pokemon Mystery Dungeon so I may be biased though.

I believe this would be the opinion of the mass of people in the picture, not the single guy :)

At the time the first games were out, PMD felt like they were the only games in the Pokemon franchise that actually tried to build a world out of the lore that Game Freak made for its own monsters. Read the Pokedex entries in any of the first three generations. They're fantastic, but they don't seem to tie into the actual games themselves. A lot of them are strangely disconnected flavor text that hint at mannerisms, abilities, or feats that simply do not translate to what the mons are like in gameplay. The fine lads at Chunsoft were apparently the only ones who bothered to read that flavor text and think, "Hey, we can make something great with this." And holy shit did they ever. Several times.

The metric system should be redone in base 12, and RPN should be the norm for teaching arithmetic.
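For anyone who hasn't met RPN (reverse Polish notation): the operator comes after its operands, so "(3 + 4) * 2" is written "3 4 + 2 *" and no parentheses are ever needed. A minimal sketch of the usual stack-based evaluator, assuming space-separated tokens and only the four basic operators; `eval_rpn` and `OPS` are names made up for this illustration.

```python
import operator

# Supported binary operators (illustrative subset).
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}

def eval_rpn(expression):
    """Evaluate a space-separated RPN expression and return a float."""
    stack = []
    for token in expression.split():
        if token in OPS:
            # The operand pushed last is the right-hand side.
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[token](left, right))
        else:
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("malformed RPN expression")
    return stack[0]
```

For example, `eval_rpn("3 4 + 2 *")` gives `14.0`: push 3, push 4, add them, push 2, multiply.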

See elsewhere in the thread, but basically because of the ease of dividing whole numbers.
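To put numbers on the divisibility point, a quick sketch (helper names are made up for illustration). It lists the whole-number divisors of 10 and 12, and checks whether 1/n has a terminating expansion in a given base, which is the case exactly when every prime factor of n also divides the base.

```python
import math

def divisors(n):
    """All positive whole-number divisors of n."""
    return [d for d in range(1, n + 1) if n % d == 0]

def terminates_in_base(denominator, base):
    """True if 1/denominator has a finite expansion in `base`."""
    d = denominator
    g = math.gcd(d, base)
    while g > 1:
        # Strip out every factor that d shares with the base.
        while d % g == 0:
            d //= g
        g = math.gcd(d, base)
    return d == 1

print(divisors(10))  # [1, 2, 5, 10]
print(divisors(12))  # [1, 2, 3, 4, 6, 12]
```

So in base 12, thirds, quarters and sixths all come out as short exact "decimals", while fifths do not; in base 10 it is the other way around for thirds and fifths.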

Four fingers have twelve phalanges between them; use the thumb to count. Also prettier.

Yes. Also everyone should be required to learn how to use a slide rule before they ever get given a calculator - I think that seeing how the numbers relate to each other on a physical device can help students conceptualize them better.