Lmao. Shit, really?!

I'm no expert but I'm pretty sure computers can't organically learn racism.

That was programmed in by somebody right?

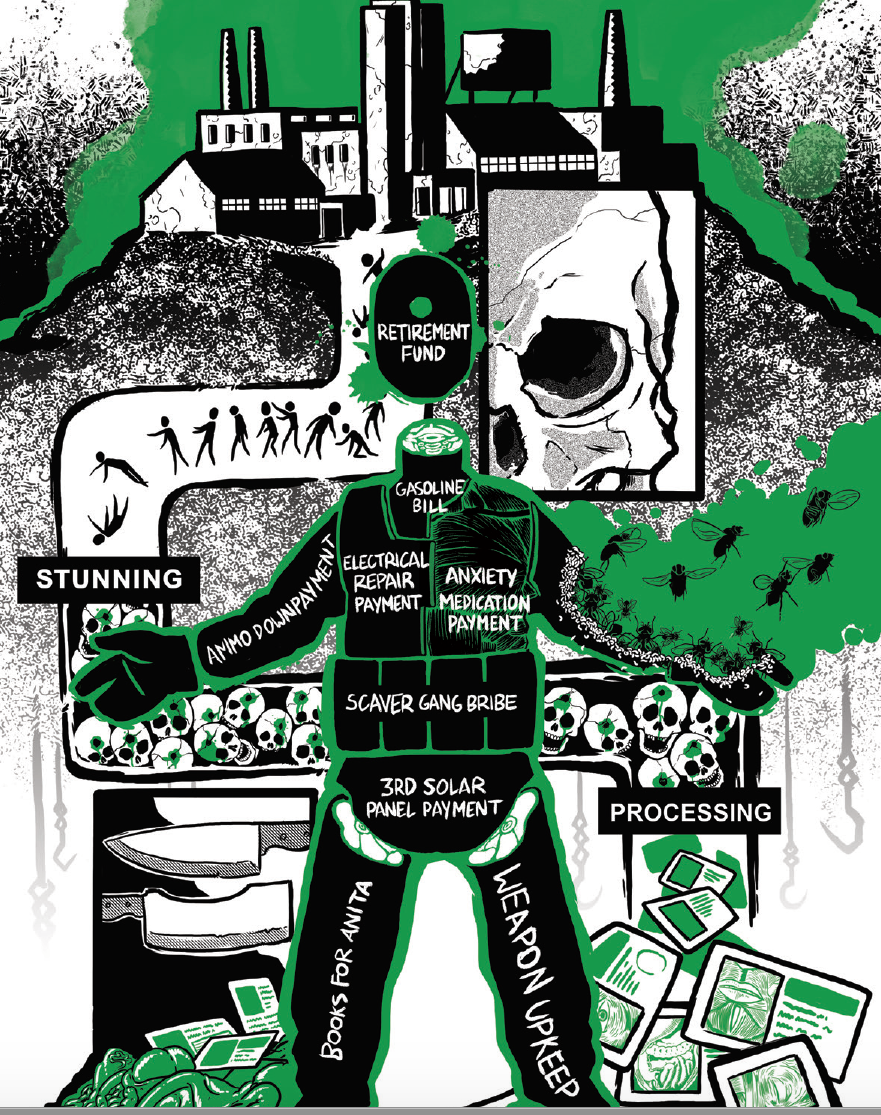

Run anti-shoplifting pilot programs in stores with high shrink

They're in poor neighborhoods

Poor neighborhoods have more minorities

Computer thinks poor people are thieves

???

Profit

🤖 POOR SHOPPERS ARE JUST AS TALENTED AND BRIGHT AS WHITE SHOPPERS

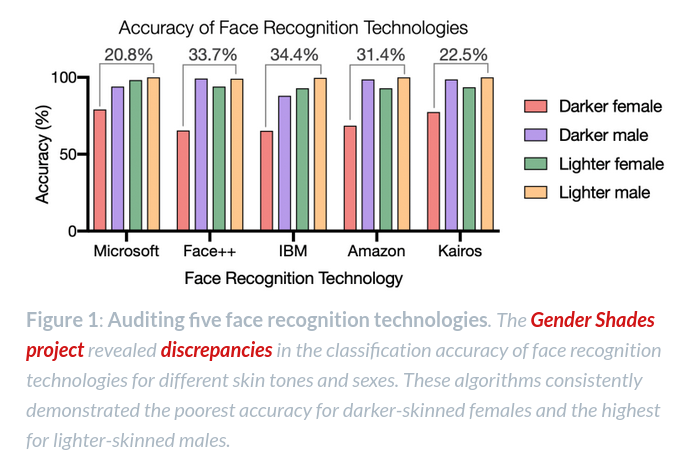

People accidentally program computers to be racist all the time. It’s a perpetual problem in AI and image recognition.

Not that I’m going to go out on a limb protecting the people making the “likely shoplifter” AI. Everyone knows what results their bosses want.

It's definitely just the thing where poor people are more likely to steal, but women and minorities are poorer on average than white people, so minorities/women come out as more likely to steal than white people before adjusting for income level.

What probably happens with those facial recognition programs is that the people who make them neglect to actually train them to recognize POC (they use tiny sample sizes compared to the ones for white people), which causes the program to treat everyone who isn't white as if they were the same person.


They're gonna end up having to do the thing from movies and video games where the camera actively aims at people and shoots infrared light to do a LIDAR scan of their faces for processing. And it's gonna be so slow that they'll install checkpoint gates where you have to stand there while it scans your face. And they're gonna have an AI robot lady voice (so they don't pay any royalties) say "beep boop, you're clear to go."

Just as systemic racism isn't the result of one individual with bad intent who could be taken out to fix it, AI isn't any different from any other bureaucratic tool. They get trained on racist data, but the very goal of going after "likely shoplifters" is a racist end. Anyone who works retail knows how often managers call over the radio asking you to keep an eye out for a black guy wearing a shirt. AI isn't really introducing new racism where less existed before. It just creates an excuse for it: it was totally the computer, and not the vague guidelines about "suspicious activity" we get told to look for, with the complete understanding that they mean black people who park too close to the store.

Same stuff as cops having a million reasons they're allowed to stop you that are all just synonyms for "you're black". Everything in this country is some form of beating around the bush when it comes to stated reasoning.

They can't even try to argue based on crime stats, since Asian people have very low conviction rates in most of those stats.

Crime stats are obviously bullshit, but this AI is too shit even for crime stats.

Nothing like a computer-illiterate jackass getting a permission slip from a computer to go be racist, so the store can pretend the massive, humiliating scene they caused was entirely the fault of misreading the computer.