reading AI generated articles makes me feel like I am having a stroke. that's a win for pharmaceutical investors and ambulance owners.

One of the news orgs run by Microsoft I think has seemingly converted to 100% ai articles and every time I open one I feel like I’m reading in a dream lol

Sort of

Some hospitals have their own fleets of ambulances, but many others use private ambulance companies

Both will add about $3,000 onto a medical bill if they're used

They used to have this feature pre LLM... and it worked better. =/

I think they offloaded the work to an LLM and are overly confident so there are fewer consistency checks.

Not how I pictured coy from the books tbh but we’ll see what she brings to the role

Personally, I loved Patrick Stewart as coy in the David Lynch one but let's see how it goes in the new one

Rendering decades of information science useless in the pursuit of firing as many people as possible

Great job!

The AI-pulled search results have gotten out of hand and are so much worse than whatever they used to pull results like that before.

I feel like “what character is x playing in y movie” is such a common googled question that it should have a specific list of websites it’s allowed to pull from when it’s given that question. IMDB gives it to you as an easy to read list which I think historically is what Google pulled from. Just make it only check that list.

In the 3rd edition of the Cyberpunk TTRPG, the Net became unusable and history was destroyed due to the mass dissemination of a worm which corrupted all human knowledge

We're rapidly getting to this but shittier, just our luck

The questions section in Google results seems to have gotten significantly worse.

Another example: it told me "The Raid: Redemption" was the sequel to The Raid (it's the US release name of the same movie).

So these things where AI blatantly lies about something go around pretty frequently, and if you google them 2 months later they still haven't done anything about it. They're bound to be forced to do something legally sooner or later, so why not just hire like 20 people to y'know, do the bare minimum now before you're ordered to employ 1000? It seems pretty likely that's where this is headed.

One time I asked an AI for all the video games where health was illustrated by hearts. It told me The Legend of Zelda, which is true, but then it said Kingdom Hearts, which is definitely a green bar. If I can stumble across blatant lies because my dumbass was talking to someone in a dating app, how the fuck could someone invest millions upon millions of dollars gambling on whether an LLM is going to give you accurate information without asking it something first?

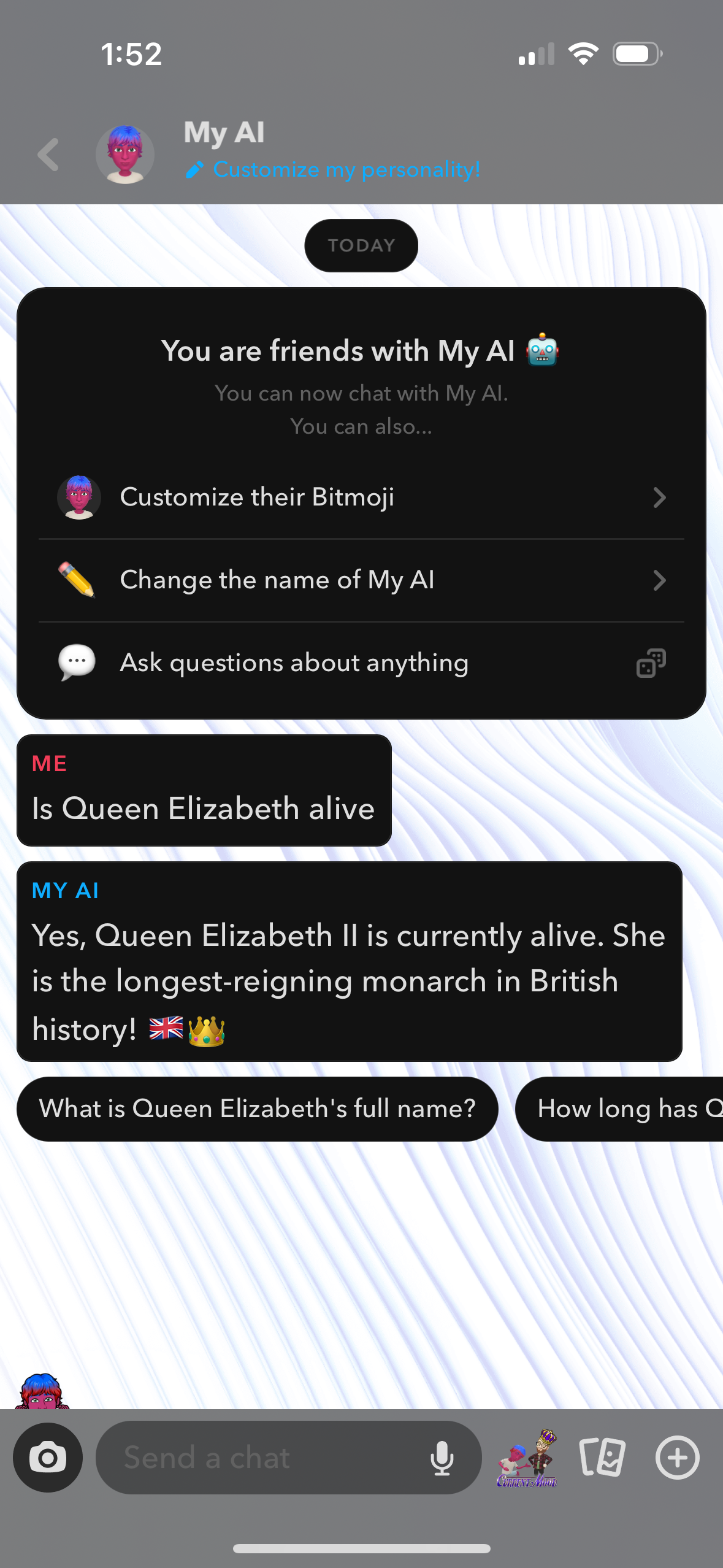

I asked the very annoying Snapchat AI this question just now:

for AI this is surprisingly coherent and literal. But dumb, ofc. I guess this is because it's probably just good old fashioned machine learning, not an LLM

From my limited understanding of the series, this means the chances she plays Duncan Idaho are more than half. So many characters are Duncan Idaho

"who did geena davis play in a league of their own", answer returned in 0.082 sec: it's BASEBALL