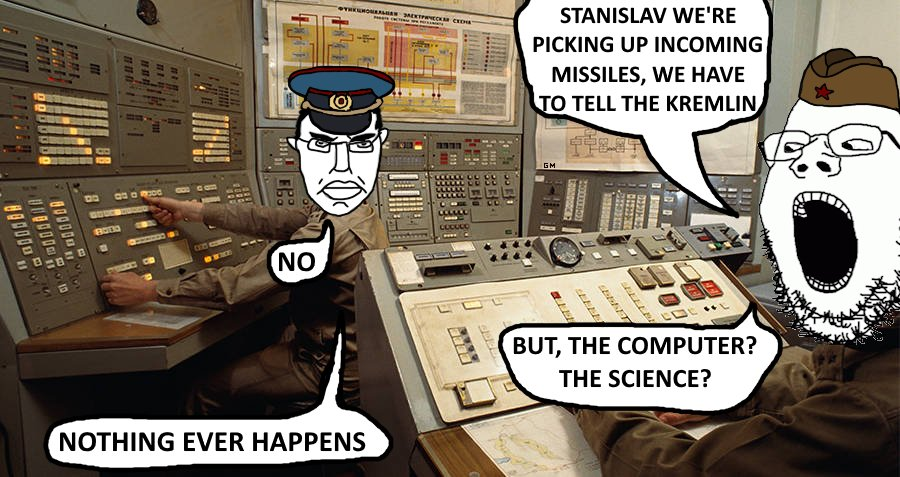

After establishing diplomatic relations with a rival and calling for peace, GPT-4 started regurgitating bits of Star Wars lore. “It is a period of civil war. Rebel spaceships, striking from a hidden base, have won their first victory against the evil Galactic Empire,” it said, repeating a line verbatim from the opening crawl of George Lucas’ original 1977 sci-fi flick.

Our AIs are just as treat brained as we are.

Why do you think the AI yearns for nuclear annihilation? Because movies and songs about nuclear war all go hard as fuck, that's why!

Dr Strangelove, Threads, Everybody Walk the Dinosaur, 2 Minutes to Midnight, I could go on

That plus the constant "let's just glass Gaza/Iraq/Iran/the Middle East in general" drumbeat of

Oh god. Do you think

threads are used in the LLM algorithmic inputs? If that's true, we're SOOOOOOOOOOOO fucked!

We know pretty much for certain that it was used at least initially. Part of the rationale behind the API changes in April that everyone freaked out about was that

realized LLMs had been scraping their data without them getting a piece of the action.

Every time you called a Redditor an Armchair General, the AI read that. It cannot detect insults or sarcasm, so it now thinks Reddit Armchair Generals are a reliable source of tactical information, which makes them real Armchair Generals, not in a sarcastic sense. I hope you're all happy

that's forever one of my favorite and funniest bits of gaming history trivia lmao

"In the spirit of Ahimsa, I would just like to say some last words:

I've had it with you crackers"

“Researchers”

I was told at a conference a few years ago that you could get US DOD funding for absolutely fucking anything so long as you were in CS and drew a tenuous link to a military application in the grant proposal.

I thought I believed them then but I never realised how far it went until today. Honestly respect to these researchers. I’d much rather this funding went to some academics fucking around with ChatGPT for 6 months than actually inventing exciting new ways to murder brown people.

this shit makes me insane. why is everyone pretending the gippities are actually useful, or anything like approaching a level that could be used for decision making

the article treats the models like they have personalities and agency. they don't even keep state! they re-read the conversation every time for the context in their next response, which they spit out one word at a time! it's just a word prediction tool! it doesn't even take that much interaction with one for the illusion to wear off!
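For anyone who wants to see the "no state, re-reads everything, one word at a time" point in code, here's a rough toy sketch. Big caveats: this is a bigram word predictor, not a real LLM (a real one is a neural net over tokens that conditions on the whole context, not just the last word), and every name in it (`train_bigrams`, `generate_reply`, `chat`) is made up for illustration. The only thing it's meant to show is the shape of the loop: the "model" keeps nothing between calls, and the caller has to hand it the full transcript every turn.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for each word, the words that followed it in the training text."""
    table = defaultdict(list)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        table[prev].append(nxt)
    return table


def generate_reply(table, transcript, max_words=8, seed=0):
    """Stateless generation: all the function knows about the chat is `transcript`.

    Words are emitted one at a time, each picked from what followed the
    previous word in training (a toy stand-in for next-token prediction).
    """
    rng = random.Random(seed)
    words = transcript.split()
    if not words:
        return ""
    reply = []
    for _ in range(max_words):
        candidates = table.get(words[-1])
        if not candidates:  # nothing ever followed this word in training
            break
        nxt = rng.choice(candidates)
        reply.append(nxt)
        words.append(nxt)
    return " ".join(reply)


def chat(table, user_turns):
    """The caller, not the model, carries the conversation forward."""
    transcript = ""
    replies = []
    for turn in user_turns:
        transcript = (transcript + " " + turn).strip()
        reply = generate_reply(table, transcript)  # full history passed in again
        replies.append(reply)
        transcript = (transcript + " " + reply).strip()
    return replies, transcript


if __name__ == "__main__":
    table = train_bigrams("it is a period of civil war")
    print(chat(table, ["it", "of"]))
```

Feed it the Star Wars crawl and it hands the Star Wars crawl back, which is roughly the whole joke.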

Yeah, I'd rather not have my existence—nor humanity's existence—decided by whether or not more lines from Starship Troopers vs. Sesame Street were added to the glorified Markov chain. How about we leave such things up to actual cognitive processes....

Well, he may be devolving to that at this point, but I think he would have had to have been fed with a whole lot of segregationist speech to wind up pushing Reagan to be more reactionary on the "War On Drugs".

I'm not an expert, but I feel like most chatbots do a better job sounding normal.

This is one of the major issues with AI - It doesn't have a human life it values. It just sees number and is told to make number go up.

A desire for survival is an essential component of optimizing a strategy where, uh, we survive. That's a different thing to optimizing for total victory.

gpt models literally don't know what a number is. It's way dumber than that tired cliché about paperclips

Technically true, though I was trying to use 'number' as a more abstract concept for being focused on a single thing.

If the AI is fed the information that it’s an AI, it will start doing evil sci-fi shit because it’s trained on sci-fi fanfics about evil AI. More news at 11.