https://www.businessinsider.com/student-uses-playrgound-ai-for-professional-headshot-turned-white-2023-8

Chinese surname

first name Rona

This young woman has already been through hell, I just know it.

Even before COVID she shared a name with a brigade of Russian fascists who joined the SS.

I am once again begging Asian parents to google the English names they choose for their kids.

Women have been commonly named Rona since long before that was a thing, and for a long time since. The native acronym of that group is a very niche thing to be aware of.

although to be fair, almost no one has heard of them. you aren't going to get bullied on the playground for sharing a name with an obscure regiment from a war about 80 years ago

also Rona is a Scottish name

Rona is a Canadian hardware store so I didn't get what you meant at first

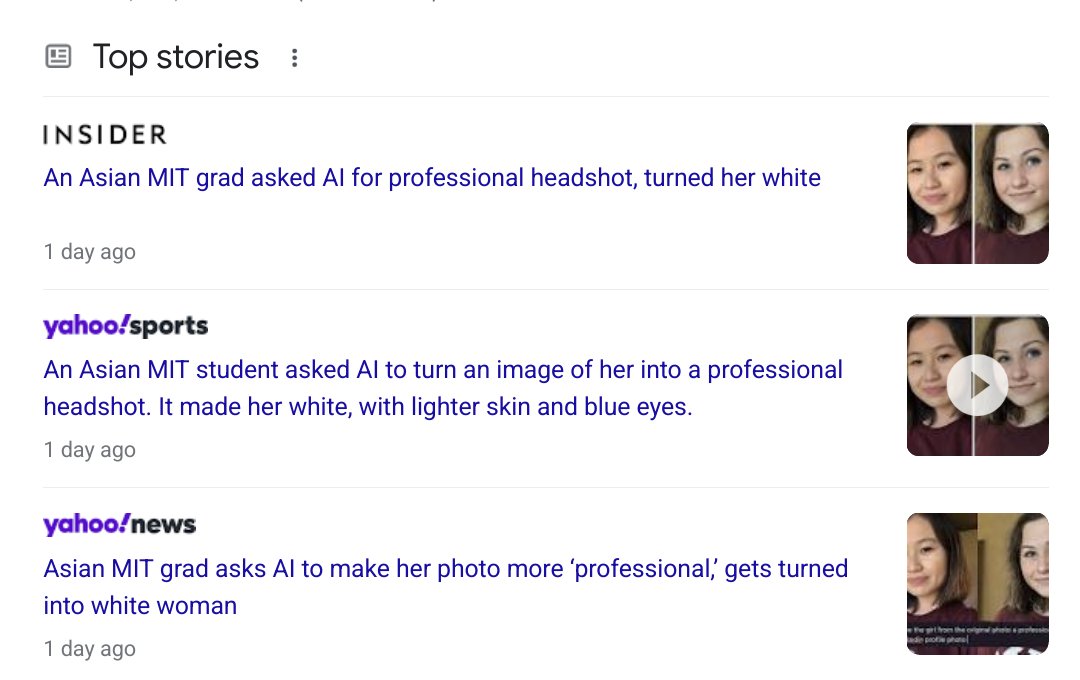

Completely off topic but I kinda hate this style of headline. "They wanted X. Then Y happened." Whatever happened to the art of the concise headline?

"AI turns Asian student white when asked to make her photo more professional"

short, declarative sentences are more attention grabbing than a single longer sentence, even when they're conveying the same info. the headline is punchier than your version, though I'm not sure that's a good thing.

It's weird because the headline Google serves for the same article is closer to mine. I guess once the link's been clicked they don't need to worry about info/length economy as much.

Yahoo even has one of each type, for whatever reason


Gotta put the main details in the headline because that's all most people read.

Reminds me of the time Google's image recognition AI kept identifying photos of black people as various kinds of apes.

Like okay experimental technology and all, but how did your fucking multi billion dollar company not test your shit with any pictures of black people?

same way the entire pharmaceutical industry oopsied and forgot to test anything on cis women

Kodak only developed (no pun intended) the ability to show brown/black skin after furniture makers had a hard time photographing their stock for catalogues

dae think the world would be way better if we just handed political power over to the apolitical, neutral and incorruptible computer bc humans are just too imperfect

I love how it did literally nothing else besides make her white. it's the Robot that Screams Slurs.

train model on pictures of white people

model generates pictures of white people

If all your references are LinkedIn ghouls' pfps (mostly white), asking the bot to make your picture more like those is going to repeat societal biases.

linkedin ghouls

"I knew when I was 12 years old that I had to start building my resume if I wanted to become president" - Pete Buttigieg :pete:

Better hold a focus meeting on how to tell people how to pronounce my name so I can be more employable

what does "AI, turn me into a professional headshot" even mean?

i am not saying "make her white" is the thing it should have done, but, what did she expect it to do

Make the lighting and background look more professional, probably. I think a lot of these AI headshot models are also supposed to make it look like you're wearing a suit or something.

A "headshot" is a sort of professional picture portrait one would use on their LinkedIn profile. I believe the term is more common in theater spaces. The shot on the left would have worked fine to be honest

She probably wanted to turn the basic selfie into something that more closely resembles a professional studio portrait in terms of lighting and composition.

yeah so like they took a thing that was what they wanted and then told the AI to do more of it? so idk what she expected to happen

I will continue being polite to AI when I have to interact with it. For one, nothing wrong with a bit of extra politeness. Two, when the robot overlords eventually do gain sentience I want to be on the good list and end up as like, a warlordbot’s pet instead of in the mines.

Okay so this is gross but it says a lot more about hiring culture than it does about this specific piece of software. The thing ran the numbers and said "you'd have a better chance of getting this job if you were white" - not an unreasonable conclusion given the systemic nature of racism.

The scarier issue is that these biases are definitely going to be ingrained into whatever LLM software our bosses are going to use to make hiring decisions. But then like, it's their hiring decisions that the machines are trained on... The first generation is just parroting corporate America's racism.

So my question to the people who actually know AI is this: will the algorithms get more racist or less racist as they iterate upon themselves? Assuming the software is eventually using its own hiring decisions as a data set, is there any way it could lead to these human-borne biases slowly being trained out due to the law of averages, or are we just going to see more weirdly specific and highly optimized configurations of racism?
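
A toy way to poke at that question, not a real answer: the sketch below retrains a made-up "model" on its own hiring decisions for a few generations. Everything in it is an assumption for illustration, including the function name `retrain_on_own_output` and the `sharpen` knob, which stands in for how strongly the model leans toward whatever pattern is most common in its training data. Real hiring systems are far messier than this.

```python
def retrain_on_own_output(share_b, sharpen, generations):
    """Toy feedback loop (hypothetical, for illustration only).

    share_b     -- fraction of past hires that came from group B
    sharpen     -- 1.0 means the model reproduces its training data exactly;
                   values above 1.0 mean it over-weights the more common pattern
    generations -- how many times the model is retrained on its own decisions
    """
    history = [share_b]
    for _ in range(generations):
        # Weight each group by its share of the training data, raised to `sharpen`.
        weight_a = (1 - share_b) ** sharpen
        weight_b = share_b ** sharpen
        # The model's new hiring mix becomes the next generation's training data.
        share_b = weight_b / (weight_a + weight_b)
        history.append(share_b)
    return history


# Start from data where group B makes up 30% of hires.
faithful = retrain_on_own_output(0.3, sharpen=1.0, generations=5)
optimizing = retrain_on_own_output(0.3, sharpen=2.0, generations=5)
print("faithful copy:  ", [round(s, 4) for s in faithful])
print("over-optimizing:", [round(s, 4) for s in optimizing])
```

In this toy setup, `sharpen=1.0` (the model copies its data faithfully) keeps the skew exactly where it started, and anything above 1.0 shrinks the minority share every round. Nothing in the loop pushes the share back toward even, so under these assumptions the bias never averages out by itself; it would take some outside correction to the data or the objective.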